Software Sabotage in Open Source Projects

Institute of Information Security and Dependability (KASTEL)

Advisor: M.Sc. Jan Wittler

Abstract. Recent incidents in the npm ecosystem, concerning the packages colors.js, faker.js, and node-ipc, gained a lot of attention because each package was sabotaged by its own maintainer. Due to the heavy use of open source dependencies and their recursive nature, such supply chain attacks can spread to thousands of other packages. These attacks are increasingly frequent and are among the greatest cybersecurity risks today. We explain how supply chain attacks work and discuss mitigation strategies. Furthermore, we ask whether open source maintainers are responsible for the integrity of their projects and whether one can rationally trust open source software. Afterward, we return to these recent incidents and analyze whether the maintainers acted in a morally wrong way and why they might have acted this way.

1 Introduction

Open Source Software (OSS) can be developed and published by anyone without restrictions. Thus, OSS packages are not guaranteed to be benign. Nevertheless, developers often include many OSS packages in their projects to speed up development [10, 12]. They take this risk because they trust the reputation of the package authors and trust that the community will quickly find and fix emerging issues [10]. But the problem goes even further: the packages one depends on can depend on other packages themselves. This means that adding a single direct dependency may recursively pull in several other packages, so-called transitive dependencies. A project can be infected if just one of those packages is malicious. Therefore, as the number of dependencies of an average software package keeps increasing, so does its attack surface. By attacking some highly popular packages, one can potentially infect more than 100,000 other packages [34]. Such Software Supply Chain Attacks (SCAs) on OSS are increasingly frequent and are considered one of the greatest cybersecurity risks today [6].

Recently, the incidents around the npm packages colors.js, faker.js, and node-ipc gained a lot of attention because each package was sabotaged by its own maintainer. Hence, we focus on the npm ecosystem of the popular programming language JavaScript. The maintainer of colors.js and faker.js sabotaged his projects because he was frustrated that even big corporations used them without paying him [30, 32]. Meanwhile, the maintainer of node-ipc sabotaged his project in such a way that it would affect users whose geolocation was Belarus or Russia, as a protest against the war that Russia is waging in Ukraine [25, 31].

In this paper, we first discuss how SCAs work and how effective they can be. We analyze which strategies an attacker might take and discuss mitigation strategies. Afterward, we analyze the aforementioned incidents from a technical perspective. Beyond that, we have to acknowledge that developing software is not an ethically neutral practice [21], especially not in the case of OSS, which is developed in socially interacting online communities such as GitHub. Therefore, we also consider the ethical perspective of SCAs in general and of these incidents in particular. As a foundation, we first introduce the ethical concepts of trust and responsibility. We then discuss whether maintainers are responsible for the integrity of their open source projects and whether it is rational to trust OSS, i.e., to trust its maintainers to act responsibly. With those arguments, we return to the two recent incidents and discuss whether the behavior of these maintainers, who were also the attackers, is morally acceptable and what factors might have led them to act irresponsibly.

2 Open Source Software

In this section, we introduce the fundamentals of OSS. We first define the term OSS itself and then discuss dependency governance in general and in the npm ecosystem in particular. Afterward, we discuss who uses and who contributes to OSS projects, and how widespread OSS usage is, in particular with regard to npm.

2.1 Fundamentals

Let us start by defining the term OSS itself. The Free Software Foundation (FSF) defines the term “Free Software” [1], and the Open Source Initiative (OSI) defines the term “Open Source Software” [2]; the two terms are commonly used synonymously [1]. For this paper, it is sufficient to consider the following definition of OSS, which we adapted from both of these definitions [1, 2]:

Definition 1. Open Source Software means software whose license gives users full access to the source code and the freedom to run, copy and change it for any purpose, as well as the right to redistribute the software as is or as a component of another software, without any royalty fee.

To understand how OSS can be reused, let us now introduce the basics of dependency governance. OSS projects are published as so-called (software) packages, which contain all of the project’s resources, e.g., source code, executables, and metadata. Packages can be uploaded to a (package) registry, which is a server that stores packages, or rather multiple versions of them. OSS packages are hosted in public registries: anyone can upload packages to them and download packages from them.

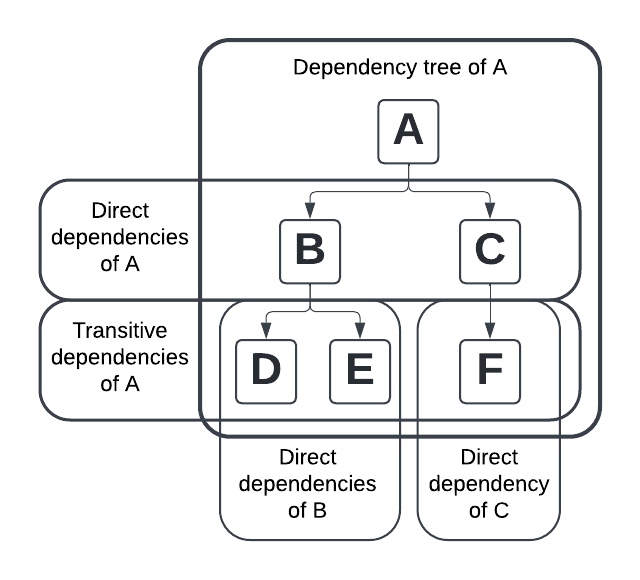

The packages that a package A directly (re)uses in its codebase are its direct dependencies. Each of those direct dependencies can have (direct) dependencies of its own. We therefore define the transitive dependencies of A as the (direct or transitive) dependencies of the direct dependencies of A. By recursively traversing the dependencies of each dependency, one obtains the dependency tree of A. We call this process resolving the dependencies of A.
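To make these definitions concrete, the following Python sketch resolves the dependency tree of a package by traversing a toy registry. The registry contents and the package names A to F are hypothetical, and versions are deliberately ignored; a real dependency manager such as npm additionally has to resolve version constraints for every node.

```python
from typing import Dict, List, Set

# Hypothetical toy registry: package name -> its direct dependencies.
# Versions are left out to keep the focus on the traversal itself.
REGISTRY: Dict[str, List[str]] = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": [],
    "E": ["F"],
    "F": [],
}


def direct_dependencies(pkg: str) -> Set[str]:
    """Packages that pkg lists itself."""
    return set(REGISTRY[pkg])


def resolve(pkg: str) -> Set[str]:
    """Resolve the whole dependency tree of pkg (direct and transitive)."""
    seen: Set[str] = set()
    stack = list(REGISTRY[pkg])
    while stack:
        dep = stack.pop()
        if dep in seen:
            continue  # a shared dependency (like D) is resolved only once
        seen.add(dep)
        stack.extend(REGISTRY[dep])  # descend into the dependency's own dependencies
    return seen


def transitive_dependencies(pkg: str) -> Set[str]:
    """(Direct or transitive) dependencies of the direct dependencies of pkg."""
    result: Set[str] = set()
    for dep in REGISTRY[pkg]:
        result |= resolve(dep)
    return result


print(sorted(direct_dependencies("A")))      # ['B', 'C']
print(sorted(transitive_dependencies("A")))  # ['D', 'E', 'F']
print(sorted(resolve("A")))                  # ['B', 'C', 'D', 'E', 'F']
```

Adding A alone thus pulls in five packages, three of which the developer of A never listed explicitly.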

To build package A, its dependency tree must be resolved and all packages in it must be downloaded from the registry and installed locally on the build system. Dependency managers are tools that automate this process [23].

To illustrate these concepts, let us look at an example. In this paper, we focus on the ecosystem around the Node Package Manager, better known as npm, which is the most popular dependency manager for the programming language JavaScript.

In npm, the developers define all of a package’s metadata in a JSON file named package.json. They must at least specify the package’s name, which uniquely identifies it in the npm registry, and its current version according to semantic versioning [22]. The direct dependencies are also listed in this file, each with its name and version constraints. Auto-updates can be constrained individually for each dependency to (1) a specific version, (2) all newer patch releases, (3) all newer patch and minor releases, or (4) all newer releases, including major releases.
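The four kinds of constraints can be illustrated with a simplified matcher. The sketch below borrows npm’s notation (an exact version for (1), `~` for (2), `^` for (3), and `>=` for (4)), but it is a deliberate simplification under stated assumptions: it ignores pre-release tags, the special treatment of `0.x` versions, and the many other range forms npm supports. The release list is hypothetical.

```python
from typing import Tuple

Version = Tuple[int, int, int]


def parse(version: str) -> Version:
    """Split 'MAJOR.MINOR.PATCH' into a tuple of integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return (major, minor, patch)


def satisfies(version: str, constraint: str) -> bool:
    """Simplified check of a version against one of the four constraint kinds."""
    v = parse(version)
    if constraint.startswith(">="):  # (4) all newer releases, including major ones
        return v >= parse(constraint[2:])
    if constraint.startswith("^"):   # (3) newer patch and minor releases
        base = parse(constraint[1:])
        return v >= base and v[0] == base[0]
    if constraint.startswith("~"):   # (2) newer patch releases only
        base = parse(constraint[1:])
        return v >= base and v[:2] == base[:2]
    return v == parse(constraint)    # (1) exactly this version


releases = ["1.4.0", "1.4.1", "1.5.0", "2.0.0"]
for constraint in ["1.4.0", "~1.4.0", "^1.4.0", ">=1.4.0"]:
    print(constraint, [r for r in releases if satisfies(r, constraint)])
# 1.4.0 ['1.4.0']
# ~1.4.0 ['1.4.0', '1.4.1']
# ^1.4.0 ['1.4.0', '1.4.1', '1.5.0']
# >=1.4.0 ['1.4.0', '1.4.1', '1.5.0', '2.0.0']
```

The wider the constraint, the more future releases, benign or not, a project accepts without any change to its own package.json.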

2.2 The Widespread Use of Open Source Software

Initially, OSS was a movement of altruistic hackers developing software in their free time for the common good [3]. To this day, many individuals support and rely on OSS, but an increasing number of companies are also adopting OSS [9, 17]. Two popular examples of commercial OSS adoption are Linux and Apache. They are the de facto backbone of today’s internet, with about 65% of all websites running Apache [9, 15]. But companies are not only using OSS; an increasing number of companies are also “contributing their intellectual property for free to the open source community” [3]. Moreover, even public institutions and governmental agencies, especially in Europe, are increasingly interested in using OSS [9].

To further understand the popularity of OSS, let us return to our example: the npm registry provides OSS packages for nearly every imaginable situation, ranging from small utility packages to large frameworks and libraries [34]. On July 31, 2021, the npm registry alone contained over 1.8 million JavaScript packages and over 21 million different versions of those packages. In 2021 alone, almost 4 million new versions and over 400,000 entirely new packages were published to the npm registry [14].

3 Software Supply Chain Attacks

The European Union Agency for Cybersecurity (ENISA) threat landscape report 2021 states that Software Supply Chain Attacks (SCAs) have become one of the major cybersecurity threats today [6]. In this section, we first define what an SCA is and then analyze the role that dependency managers play in SCAs. Thereafter, we discuss some SCA strategies and how one might mitigate them.

3.1 Definition

First, we want to define what can be understood as malicious code:

Definition 2. “[Malicious code] implements malicious behavior including (but not limited to) exfiltrating sensitive or personal data, tampering with or destroying data, or performing long-running or expensive computations that are not explicitly documented.” [29]

Based on that we can define SCAs. The following definition is adapted from the definitions by Ladisa et al. [17] and Ohm et al. [23]:

Definition 3. A Software Supply Chain Attack is characterized by the injection of malicious code into a software package in order to infect directly or transitively dependent software packages and compromise the systems using them.

3.2 The Role of Dependency Managers and Registries

As we have seen in Section 2.1, dependency managers make it easy to add OSS dependencies to a project. This leads to heavy use of OSS packages, even for simple tasks. Especially in the npm ecosystem, it is quite common to rely on very small packages that just perform simple computations, so-called micropackages [34], or to depend on a large library and only use a single function from it [12]. Such dependency choices can be driven by economic pressure to develop as quickly as possible. Although they may bring short-term gains, each dependency increases the attack surface of a project [12].

In 2018, each npm package had on average 2.8 direct dependencies. Due to their recursive nature, each linear increase in direct dependencies leads to a super-linear increase in transitive dependencies [34]. In 2019, an average npm package had about 90 dependencies overall (direct and transitive) [10].

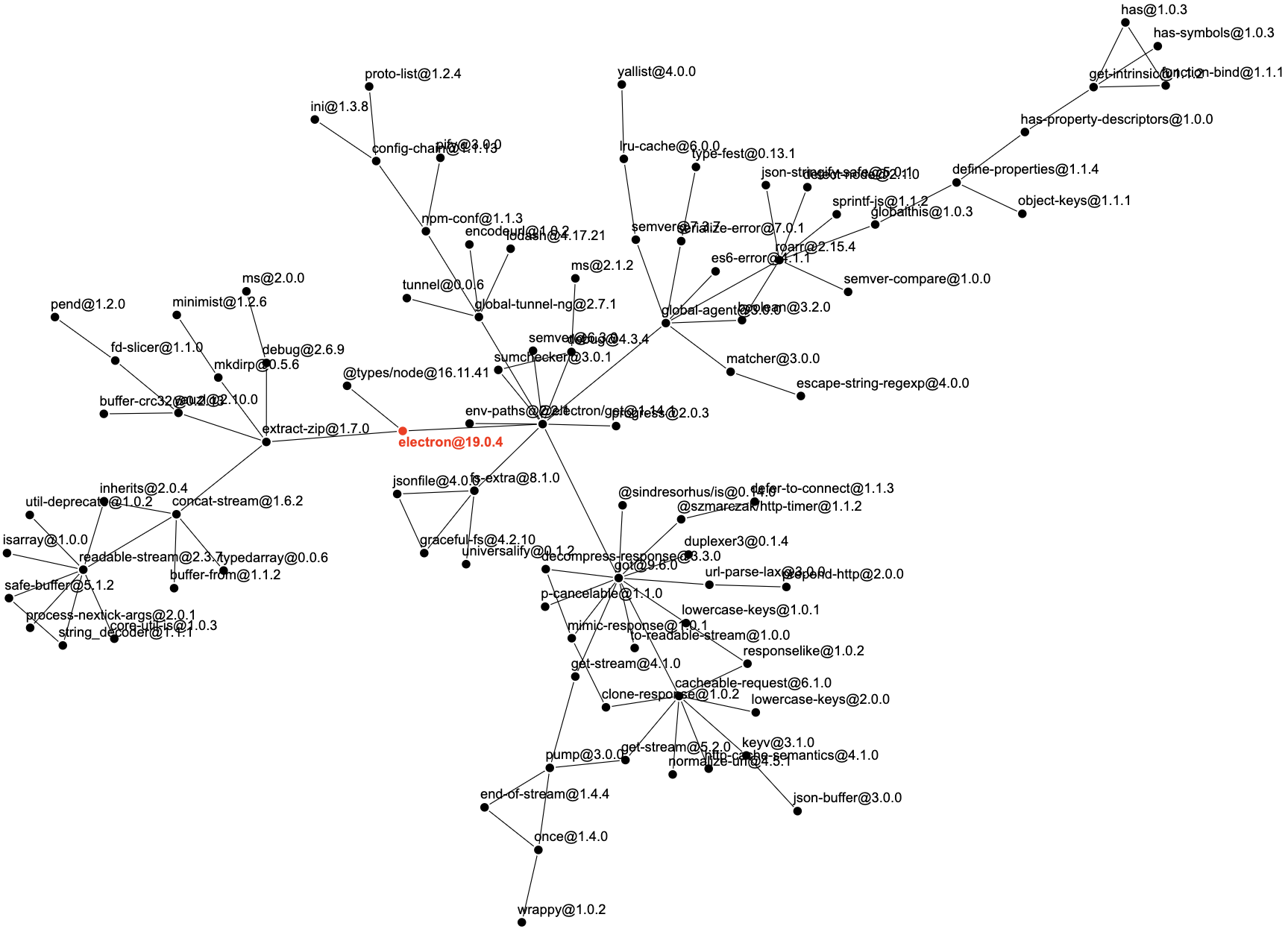

As an example, Figure 2 shows the dependency tree of the popular electron package, which allows building cross-platform desktop apps using web technologies. Although electron has only three direct dependencies, its dependency tree contains 91 dependencies in total [4].

Another major risk is that dependency managers can automatically update dependencies, as described in Section 2.1. When a package is installed, an applicable version is resolved for each dependency according to its version constraints. Thus, installations on different machines and/or at different times may resolve different versions. At first, it may seem more secure to lock all dependencies to a specific version in order to prevent malicious updates from being installed unnoticed. But then updates need to be done manually; if they are forgotten, important vulnerability fixes might not be installed [34].
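The following sketch illustrates why the same constraint can resolve to different versions at different times. It assumes a caret-style range (all newer minor and patch releases) and a hypothetical release history; `resolve_caret` is an illustrative helper that simply picks the highest allowed version, not part of npm.

```python
from typing import List, Optional, Tuple


def parse(version: str) -> Tuple[int, int, int]:
    """Split 'MAJOR.MINOR.PATCH' into a tuple of integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return (major, minor, patch)


def resolve_caret(constraint: str, published: List[str]) -> Optional[str]:
    """Pick the highest published version allowed by a caret range like '^2.3.0'."""
    base = parse(constraint.lstrip("^"))
    allowed = [v for v in published if parse(v) >= base and parse(v)[0] == base[0]]
    return max(allowed, key=parse, default=None)


# Hypothetical release history of one dependency at two points in time.
published_monday = ["2.3.0", "2.3.1"]
published_tuesday = published_monday + ["2.4.0"]  # a new, possibly malicious, release

print(resolve_caret("^2.3.0", published_monday))   # 2.3.1
print(resolve_caret("^2.3.0", published_tuesday))  # 2.4.0
```

A pinned version such as 2.3.1 would resolve identically on both days, but it would also miss a legitimate fix released as 2.3.2 until someone updates the pin manually, which is exactly the trade-off described above.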

Furthermore, while npm’s openness is a major reason for its popularity and growth, it is also a huge risk. To publish a package to the npm registry, one only has to create a user account on the npm website. There are no restrictions or checks. The user who first published a package is automatically a maintainer, and can thus upload new versions at any time. Maintainers can also add other users as maintainers [29, 34].

Moreover, the npm registry does not require a link to the repository in which the source code is publicly hosted. Attackers can abuse this to stealthily introduce malicious packages or package versions [29, 34].

We can conclude that while dependency managers and OSS registries automate the dependency governance and facilitate the use of OSS, they also pose a security risk.

3.3 Attack Strategies

In the following, we outline some relevant attack strategies: (1) The attacker creates a new package from scratch that fulfills a real purpose. They advertise it until it becomes popular and is used by many downstream users. Then they publish an update including malicious code, which infects everyone pulling the latest version [17]. (2) The attacker publishes a malicious package whose name is similar to that of a legitimate package, for example by just dropping a hyphen, thereby introducing name confusion. Some downstream users then pull the wrong package, either because they are not sure which one is legitimate or because of a typing error [17]. (3) The attacker injects malicious code into an already existing package, infecting downstream users who pull the latest update [17].
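Registries or security tools could try to catch strategy (2) by comparing the names of new packages against popular ones. The sketch below shows one simple heuristic, chosen here for illustration and not taken from the cited work: it flags a name that matches a popular name after removing separators such as hyphens, or that is within a small edit (Levenshtein) distance of it. All package names are hypothetical.

```python
from typing import Iterable, List


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        previous = current
    return previous[-1]


def normalize(name: str) -> str:
    """Lowercase and drop separators, so 'fast-parser' becomes 'fastparser'."""
    return name.lower().replace("-", "").replace("_", "").replace(".", "")


def confusable_with(candidate: str, popular: Iterable[str], max_distance: int = 1) -> List[str]:
    """Popular package names that the candidate name could be mistaken for."""
    hits = []
    for name in popular:
        if candidate == name:
            continue  # the legitimate package itself
        if normalize(candidate) == normalize(name) or levenshtein(candidate, name) <= max_distance:
            hits.append(name)
    return hits


popular = ["fast-parser", "tiny-logger"]
print(confusable_with("fastparser", popular))  # ['fast-parser']  (dropped hyphen)
print(confusable_with("tiny-loger", popular))  # ['tiny-logger']  (typing error)
```

Such a heuristic also flags legitimately similar names, so it can only prioritize packages for manual review rather than reject them outright.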

The last strategy can be very attractive for an attacker because some highly popular packages have more than 100,000 other packages depending on them [34]. One can thus reach thousands of users of the legitimate package and the packages depending on it.

However, this strategy requires access to the codebase of the legitimate package. Strategies to gain access include (1) becoming a maintainer of the project through social engineering [23]; (2) contributing code that seemingly solves an issue but is malicious, which needs to be accepted by a maintainer and whose malicious parts may be hidden by means of obfuscation [23]; and (3) compromising the credentials of a maintainer [23].

There are also different strategies regarding when the malicious code is executed. (1) Malicious code can be placed in so-called install scripts, which the dependency manager runs during the installation of a package [23]. This attack thus targets the developers using the package as a dependency. (2) Malicious code can be placed in tests, thus attacking developers who run those tests [23]. (3) Malicious code can also be placed in the regular control flow of the package and is thus executed at runtime of the infected package [23]. This attack targets everyone running the package, i.e., end-users and developers.
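Because install scripts run automatically, a cautious developer can at least check which ones a dependency declares before installing it. npm runs the `preinstall`, `install`, and `postinstall` scripts listed in a package’s package.json when that package is installed, and `npm install --ignore-scripts` disables them. The helper below is a hypothetical sketch that lists these hooks from a package.json document; the manifest is made up for illustration.

```python
import json
from typing import Dict

# Lifecycle scripts that npm runs automatically when it installs a package.
INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def install_scripts(package_json: str) -> Dict[str, str]:
    """Return the install-time scripts that a package.json document declares."""
    manifest = json.loads(package_json)
    scripts = manifest.get("scripts", {})
    return {hook: scripts[hook] for hook in INSTALL_HOOKS if hook in scripts}


# Hypothetical manifest of a dependency under review.
manifest = """{
  "name": "example-dependency",
  "version": "1.0.1",
  "scripts": {"test": "node test.js", "postinstall": "node setup.js"}
}"""
print(install_scripts(manifest))  # {'postinstall': 'node setup.js'}
```

The `test` script is not reported, since npm only runs it on request (e.g., via `npm test`), which matches strategy (2) targeting only developers who run the tests.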

Furthermore, malicious code may be executed unconditionally or only if certain conditions are met. Conditional execution can be used to (1) hide the malicious behavior, e.g., in test environments [23], (2) only infect specific packages [23], or (3) only run depending on the victim package’s application state [23].

3.4 Mitigation Strategies

Now that we understand what SCAs are, we want to analyze a few strategies to mitigate such attacks. The first mitigation strategy that comes to mind, and probably the most effective one, is manually reviewing the source code of each dependency one adds and of each version one updates to. But due to the huge number of dependencies of an average npm package (cf. Section 3.2) and the frequent release of new updates, this is infeasible, as most of the literature agrees [17, 29, 34]. For example, in 2019 approximately 29 updates per hour were published on npm [10]. Hence, it is also unrealistic for the community to review all packages. Moreover, even if one could thoroughly review all packages one depends on, this strategy would not provide 100% security, since reviewers can always overlook certain vulnerabilities.
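To put the 2019 figure into perspective, here is the weekly volume it implies, assuming the rate of 29 updates per hour stayed roughly constant over the week:

```latex
29\,\frac{\text{updates}}{\text{hour}} \times 24\,\frac{\text{hours}}{\text{day}} \times 7\,\frac{\text{days}}{\text{week}} = 4{,}872\,\frac{\text{updates}}{\text{week}}
```

A community that wanted to review every update would thus have to finish one review roughly every two minutes, around the clock.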

Therefore, researchers are working on systems that automatically and efficiently flag potentially suspicious packages. These systems build on the observation that many malicious package versions use certain functionality that older, legitimate versions never used. Examples include native Node.js libraries, which provide operating-system-level functionality such as network or filesystem access, and the JavaScript eval function, which evaluates code at runtime. Malicious updates also often introduce new files, dependencies, or install scripts that were not used before [10]. Garrett et al. [10] proposed a machine-learning-based approach to detect such anomalies that suddenly occur from one version to the next. Their model is trained on the version history of several packages rather than tailored to each individual package [10]. Sejfia and Schäfer [29] built on this approach with their system Amalfi. Besides the anomalies described above, Amalfi addresses two further observations: (1) “malicious package versions tend not to have their source code publicly available, in order to avoid detection” [29], and (2) “attackers often publish multiple textually identical copies of one and the same malicious package under different names” [29]. Accordingly, Amalfi uses (1) machine-learning classifiers trained on known malicious and benign packages [29], (2) a reproducer that tries to build a package from its source code [29], and (3) “a clone detector that finds (near-)verbatim copies of known malicious packages” [29].

Garrett et al. [10] tested their approach on 2,288 packages and found that their model reports 539 suspicious updates per week. They state that even in the worst case, in which none of the reported updates is actually malicious, the review effort can be reduced by 89% [10]. Sejfia and Schäfer [29] found that the approach by Garrett et al. [10] flagged more packages than Amalfi. However, Amalfi has a larger scope [29], and Sejfia and Schäfer note that Garrett et al. [10] “did not triage the results in detail […]” [29]. They state that Amalfi “can detect a significant number of previously unknown malicious packages” [29].
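The core idea behind these systems, comparing a new version against what came before, can be sketched without any machine learning. The following rule-based Python sketch is a simplification for illustration only and is not the classifier of Garrett et al. [10] or Amalfi [29]. It assumes that some extraction step (hypothetical, not shown) has already summarized each version into its imported Node.js modules, eval usage, install scripts, and dependencies, and it compares only two consecutive versions instead of the whole history.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# Node.js built-in modules with operating-system-level access
# (an illustrative subset, not an exhaustive list).
SENSITIVE_MODULES = frozenset({"fs", "net", "http", "https", "child_process", "dns"})


@dataclass(frozen=True)
class VersionFeatures:
    """Hypothetical summary of one package version, produced by some extractor."""
    imported_modules: FrozenSet[str] = frozenset()
    uses_eval: bool = False
    install_scripts: FrozenSet[str] = frozenset()
    dependencies: FrozenSet[str] = frozenset()


def anomalies(old: VersionFeatures, new: VersionFeatures) -> List[str]:
    """Describe features that the new version uses but the old one did not."""
    found = [f"new sensitive module: {m}"
             for m in sorted((new.imported_modules - old.imported_modules) & SENSITIVE_MODULES)]
    if new.uses_eval and not old.uses_eval:
        found.append("starts using eval")
    found += [f"new install script: {s}"
              for s in sorted(new.install_scripts - old.install_scripts)]
    found += [f"new dependency: {d}"
              for d in sorted(new.dependencies - old.dependencies)]
    return found


old = VersionFeatures(imported_modules=frozenset({"path"}))
new = VersionFeatures(imported_modules=frozenset({"path", "child_process"}),
                      install_scripts=frozenset({"postinstall"}))
print(anomalies(old, new))
# ['new sensitive module: child_process', 'new install script: postinstall']
```

An update that changes the code but none of these features yields an empty list, which shows the inherent blind spot of such feature-based detection.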

In conclusion, machine-learning-based approaches can drastically reduce the number of packages that must be reviewed manually. However, one has to keep in mind that these systems do not identify malicious packages with 100% certainty.

Let us now look at another approach. We already observed that many malicious packages use operating-system-level functionality. At the same time, many packages published to npm serve simple purposes and do not require privileges like filesystem or network access [7]. Therefore, Ferreira et al. [7] “propose a permission system that sandboxes packages and enforces per-package permissions […]” [7], following the principle of least privilege. Such permission systems are commonly used, e.g., in mobile apps and web browser extensions [7]. The developer of a package selects the permissions the package needs “from a small set of common and easy to understand permissions” [7]. Every time a user installs a new package or a package is updated, the list of permissions needed by that package is shown to them, and the installation or update is only performed if they grant those permissions. At runtime, the system also ensures that no package can use functionality for which it lacks permission [7].
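The install-time prompt and the runtime check described above can be sketched as follows. The class, its methods, and the permission names `fs` and `network` are hypothetical and only mirror the behavior described in the text; they are not the API of the system by Ferreira et al. [7].

```python
from typing import Callable, Dict, FrozenSet, Set

# Callback that shows the requested permissions to the user and returns their decision.
Approver = Callable[[str, FrozenSet[str]], bool]


class PermissionManager:
    """Hypothetical per-package permission store following least privilege."""

    def __init__(self) -> None:
        self.granted: Dict[str, FrozenSet[str]] = {}

    def install(self, package: str, requested: Set[str], approve: Approver) -> bool:
        """Install or update a package only if the user grants all requested permissions."""
        wanted = frozenset(requested)
        if wanted and not approve(package, wanted):
            return False  # the installation or update is not performed
        self.granted[package] = wanted
        return True

    def is_allowed(self, package: str, permission: str) -> bool:
        """Runtime check: may this package use the given capability?"""
        return permission in self.granted.get(package, frozenset())


pm = PermissionManager()
pm.install("string-utils", set(), lambda pkg, perms: False)  # needs nothing, so no prompt
pm.install("http-helper", {"network"}, lambda pkg, perms: True)
print(pm.is_allowed("http-helper", "network"))  # True
print(pm.is_allowed("http-helper", "fs"))       # False
# An update that suddenly requests filesystem access is declined by the user:
print(pm.install("http-helper", {"network", "fs"}, lambda pkg, perms: False))  # False
```

In a real system the runtime check must be enforced by the sandbox itself rather than called voluntarily by the package; otherwise a malicious package could simply skip it.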

In their tests, Ferreira et al. [7] found that their system could protect 31.9% of packages, while its average performance overhead, at well below 1%, is negligible [7].

4 The colors.js, faker.js, and node-ipc Incidents

Recently, the SCAs on the npm packages colors.js, faker.js, and node-ipc gained a lot of attention because they were carried out by the packages’ own maintainers. In the following, we analyze these incidents from a technical viewpoint.

4.1 colors.js and faker.js

The colors.js and faker.js incidents received particular attention because these legitimate and widely used packages were sabotaged by their own maintainer, the npm user Marak (Marak Squires).

colors.js is used to print colored messages to the terminal. faker.js is used to generate fake data, e.g., for tests [30]. colors.js regularly receives over 20 million downloads a week and was depended upon by over 19,000 packages at the time of the incident. Even highly popular packages by big corporations, like Amazon’s aws-cdk package and Facebook’s jest package, depended upon colors.js. faker.js is also quite popular, with more than 2,500 dependent packages at the time of the incident [30, 32].

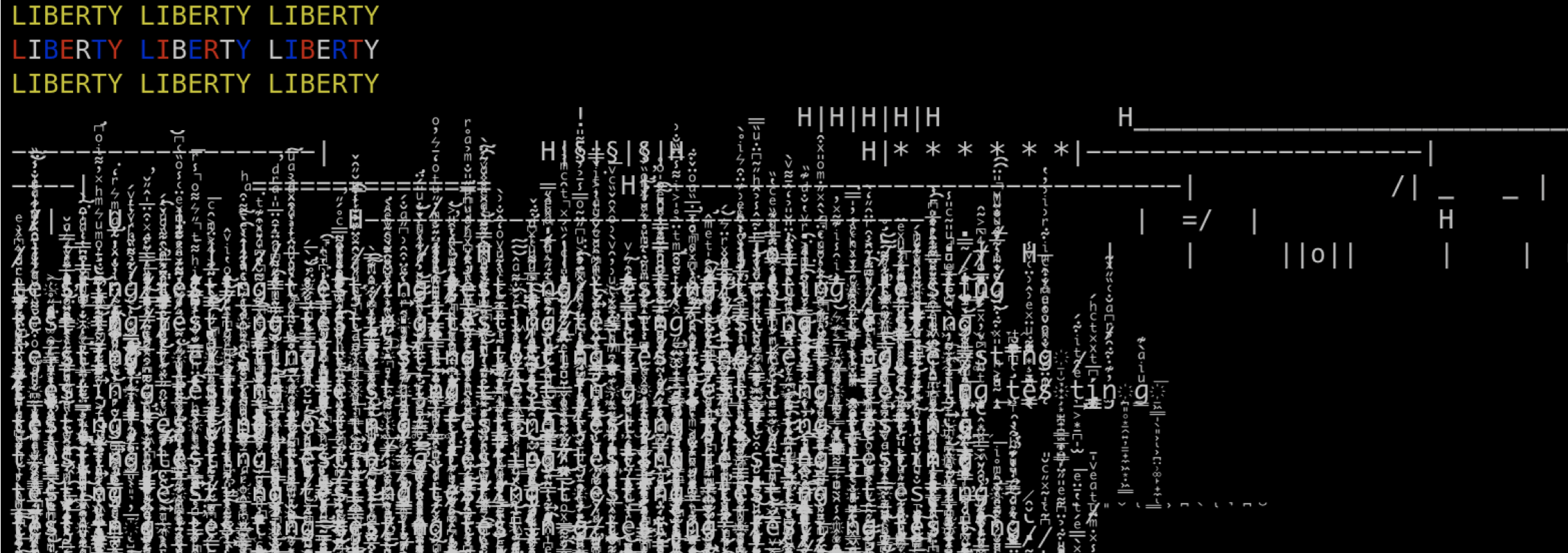

On January 5, 2022, Marak removed all source code from the faker.js repository via a forced commit with the commit message “endgame” and published an empty package as version 6.6.6 to npm [30, 32]. On January 8, 2022, Marak released the colors.js versions 1.4.1, 1.4.2, and 1.4.44-liberty-2 on npm. These versions would print “LIBERTY” multiple times before entering an infinite loop that prints non-ASCII characters, so-called Zalgo text, to the console. An example output can be found in Figure 3 [30, 32].

With faker.js, it was obvious that the repository was empty, and even if the empty version was installed automatically, dependent packages would simply fail to build without harming the system. Therefore, one could argue that this attack does not even meet the criteria for malicious code (cf. Definition 2) and thus cannot be classified as an SCA (cf. Definition 3). The malicious versions of colors.js, on the other hand, introduced an infinite loop, i.e., they performed long-running (in fact, infinite) computations without documenting this, and can thus be categorized as malicious (cf. Definition 2). Since these versions affected many dependent packages, the colors.js incident is an SCA according to Definition 3.

Let us further categorize the attack based on Section 3.3. The attack strategy was to infect an already existing package. However, the attacker did not have to gain access to the package, since he was its original maintainer, which is what makes this attack so interesting. The infinite loop was placed in the regular control flow of colors.js and executed unconditionally. It thus targeted anyone running the package, including end-users.

Next, let us discuss whether the colors.js attack could have been prevented by the mitigation strategies presented in Section 3.4. The infinite loop could easily have been found by manually reviewing the update. But since the malicious colors.js versions did not require any permissions that older versions did not need, machine-learning-based mitigation systems and sandboxing systems could not have prevented this attack.

4.2 node-ipc

The node-ipc package is used for local and remote inter-process communication on Linux, macOS, and Windows. It regularly receives more than 1 million weekly downloads on npm [25].

The npm user RIAEvangelist (Brandon Nozaki Miller) is the maintainer of node-ipc. On March 7, 2022, he published version 10.1.1 of node-ipc on npm. This version checks the geolocation of the system running node-ipc at random time intervals. If the geolocation matches Belarus or Russia, random files on the system are wiped and overwritten with a single heart emoji [25, 31]. About ten hours later, version 10.1.2 was published with nearly no changes, also containing the malicious code. This was probably done so that dependency managers would pull the malicious update [25, 31]. On March 8, 2022, RIAEvangelist published a new package on npm called peacenotwar. In the package’s description, he writes:

peacenotwar — README

This code serves as a non-destructive example of why controlling your node modules is important. It also serves as a non-violent protest against Russia’s aggression that threatens the world right now. This module will add a message of peace on your users’ desktops, and it will only do it if it does not already exist just to be polite.

Source: [28]

On the same day, he published version 10.1.3 of node-ipc, which removed the malicious code. Shortly thereafter, he published a new major version 11.0.0, which introduced a dependency on the peacenotwar package. In this version, every time a node-ipc function was called, a message from the peacenotwar package was printed to the console and a file containing information on the current war situation in Ukraine was written to the system’s desktop [25, 31]. On March 15, 2022, a patch version 9.2.2 of node-ipc was published. This version also added peacenotwar as a dependency and ran it whenever node-ipc was imported. RIAEvangelist also introduced a dependency on the aforementioned malicious package colors.js (cf. Section 4.1). The release of this version was especially impactful, as the package @vue/cli, the command line tool of the highly popular JavaScript front-end framework Vue.js, depended on the 9.2.x version range [25, 31].

Since some versions of node-ipc tampered with data on the user’s system, those versions are malicious according to Definition 2. Because the attack spread to many other packages, including the aforementioned @vue/cli, it can be categorized as an SCA in line with Definition 3. Based on Section 3.3, we can further categorize this attack. Similar to colors.js, the strategy was to infect an already existing package, with the particularity that the attacker was the original maintainer. The malicious code was also placed in the regular control flow, affecting developers and end-users alike. However, the malicious behavior was only performed conditionally, based on the geolocation of the executing system.

Next, let us analyze whether the mitigation strategies presented in Section 3.4 could have prevented this attack. It could have been mitigated by manual review, but the changes were hidden by means of obfuscation, so reviewers could easily have overlooked them. Moreover, since node-ipc had always used native Node.js libraries to enable (remote) inter-process communication, the only observable anomaly would be the access to the user’s desktop. Depending on how fine-grained a sandboxing system is and how well a machine-learning-based anomaly detection system is trained, these approaches could have averted the incident, but it is also possible that this attack would have slipped through.

5 Trust and Responsibility in Open Source Software

In this section, we first lay out the fundamentals of responsibility and trust in ethics in general and in technology ethics in particular. With this knowledge, we then discuss the responsibilities an OSS developer has and whether one can rationally trust OSS.

5.1 Responsibility Fundamentals

In this section, we want to discuss what it generally means to be morally responsible. The concept of responsibility is central in technology ethics and there are several different understandings of it.

First, we want to differentiate between moral responsibility and causal responsibility. While moral responsibility is a normative relation, causal responsibility merely means causation. One can say “the campfire is responsible for the forest fire” [11, p. 38], which only conveys that the campfire caused the forest fire. In contrast, moral responsibility “is about human action and its intentions and consequences” [21]. Since we want to ethically analyze the actions of OSS developers in this paper, we hereinafter only discuss moral responsibility and mostly refer to it simply as responsibility.

Moral responsibility has a prospective and a retrospective dimension. Responsibility is prospectively assigned whenever actors have normative expectations towards themselves or other morally acting entities. A prospective responsibility thus describes what a person must do or fulfill in the future [11, 21]. Responsibility is retrospectively assigned whenever an actor criticizes themselves or another entity for their actions, intentions, or neglect. Determining retrospective responsibility involves determining who was at fault for an occurrence in the past [11, 21]. Both prospective and retrospective responsibility are social relations: they describe which obligations or duties someone might expect someone else to fulfill. Through responsibility, certain behavior can be corrected or encouraged [21].

Let us now discuss the requirements for responsible behavior. The famous philosopher Hans Jonas concentrated on the concept of responsibility in the context of technology. He formulates the bare minimum requirements for responsible behavior in his popular imperative:

Proposition 1. “Act so that the effects of your action are compatible with the permanence of genuine human life” [16].

More specifically, a person can be held responsible for their behavior if (1) that behavior has a morally significant outcome, (2) they are able to consider the consequences of that behavior, (3) they are able to control their behavior, and (4) that behavior was voluntary, based on their own authentic thoughts and motivations. They may not be held responsible for an action if they had no choice but to act the way they did. Similarly, a person must have some kind of influence on an event in order to be held responsible for it [21].

5.2 Trust Fundamentals

Since the OSS community is fundamentally based on trust, we need to discuss what trust means philosophically. First, we need to understand that trust and trustworthiness are not the same. We can trust someone who is not trustworthy or distrust a trustworthy person. Trust is thus an attitude that one party (the trustor) has towards another entity (the trustee), whereas trustworthiness is a property one can have regardless of being trusted by others. Trust, however, requires that the trustor regards the trustee as trustworthy [20].

Furthermore, trust is only plausible if the trustor is able to develop trust and is optimistic that the trustee is trustworthy. Trust is well-grounded if the trustee is in fact trustworthy. However, trust can be justified even if the trustee is not trustworthy. Trust might be justified because it is valuable to trust someone in and of itself [20].

The trustor relies on the trustee being competent and motivated to do what they are trusted to do. Motives-based theories hold that trustworthy people are motivated to maintain the trust they were given, out of either self-interest or goodwill. Trustworthy people include the interests of the trustor in their own. Conversely, we should only trust people whom we can reasonably expect to include our interests in their own [20]. Non-motives-based theories regard the trustee as a morally responsible person (see Section 5.1). Therefore, the trustor has not only predictive but also normative expectations of the trustee. The trustworthy person can have many different kinds of motives, “including, among others, goodwill, ‘pride in one’s role’, ‘fear of penalties for poor performance’, and ‘an impersonal sense of obligation’ ” [33, p. 77], as cited by [20].

While trusting someone, the trustor risks that the trustee might fail to do what they were trusted to do, or might intentionally abuse the given trust and betray the trustor. The trustor can try to reduce these risks by monitoring the trustee. But the more they do so, the less they actually trust the trustee [20].

Finally, one might ask whether trust can be rational. If one tries to eliminate the risks that inherently come with trust, it may be reduced to mere reliance. Also, trust might make the trustor resistant to evidence against the trustworthiness of the trustee. That would mean trust can never be rational; as a counter-example, however, it seems rational to trust emergency room physicians [20]. Therefore, some philosophers define rationality differently in the context of trust: trust can be rationally justified if the belief in someone’s trustworthiness can be rationally justified [20]. With this definition, we must ask whether it is rational to derive someone’s trustworthiness from past experiences or whether one needs evidence for it. Gathering evidence might undermine the trust in the other person, or might not be possible at all, since we cannot look into the future [20].

5.3 The Responsibility of Open Source Software Developers

In this section, we discuss the responsibilities that apply to OSS developers and why they may act irresponsibly. Most engineering ethics codes state in some way:

Proposition 2. Engineers shall conduct their profession with integrity and honesty and in a competent way, holding paramount the safety, health, and welfare of the public [8].

One can apply these requirements to responsible software engineering as well. According to engineering ethics codes, engineers also have responsibilities towards their clients [8]. Since most OSS developers are unsalaried volunteers [24], one might argue that the users of their OSS packages should not be equated with clients in the business sense, and that therefore no responsibility towards those users exists.

This may hold to some extent. A developer who maintains an OSS package in their free time without major funding may not be responsible for actively maintaining it, as long as they make clear to its users that the package is not actively maintained and may therefore contain unfixed vulnerabilities. On the other hand, we need to ask whether they remain responsible for fixing dangerous, known vulnerabilities that pose a risk to many dependent systems or even to human users. In line with Hans Jonas’ imperative (Proposition 1), they should be held responsible if their package harms a human being due to a vulnerability they could have averted (cf. Section 5.1). However, if a developer actively maintains a package, or implies to its users that it is actively maintained, one might argue that the responsibilities stated in engineering ethics codes apply to them. They would then in fact be responsible for the integrity of their package and, moreover, toward its users, who trust them and thus normatively expect them to act responsibly.

In the incidents we discussed earlier, the maintainers abused the trust they were given by performing an SCA on their own packages. This raises the question of why they did not feel the normative social pressure exerted by the users who trust them (as described by non-motives-based theories, cf. Section 5.2). First, an OSS developer must be aware that developing software is not an ethically neutral practice [21]. Further, “computer technologies can obscure the causal connections between a person’s actions and the eventual consequences” [21]. The consequences might unfold at some point in the future (temporal distance) and/or at a faraway location (physical distance) [21]. They might also affect users whom the OSS developer does not know, even remotely, due to the anonymity of the internet and the potentially huge number of users. Therefore, the sense of responsibility an OSS developer feels might be reduced, since they might not fully grasp the consequences of their actions [21]. It is also important to consider that an OSS developer’s actions might be influenced by “hierarchical or market constraints” [21] or by “economic or time pressures” [5], which might lead to laxity or irresponsible behavior.

5.4 Trust in and Trustworthiness of Open Source Software

As we have seen in Section 2.2, many individuals and companies rely on OSS, and institutions increasingly adopt it as well. Descriptively, this means that they trust OSS: it is not only widely used but also widely trusted. In Section 5.2, we concluded that the trustor (here, the OSS users) must believe the trustee (here, OSS) to be trustworthy. In the following, we discuss whether it is rational to trust OSS.

Trust in OSS can be called well-grounded if we can expect OSS to be secure. Advocates of OSS security often cite the Principle of Many Eyes, also referred to as Linus’s Law: “Given enough eyeballs, all bugs are shallow” [26]. The idea is that the more developers read the source code of a package, the more secure it becomes, because each bug will be spotted by at least one of them. If Linus’s Law were strictly true, OSS would become invulnerable over time, because the community would eventually spot all bugs and vulnerabilities [13]. Yet OSS packages are still vulnerable [14, 23]; e.g., up until July 31, 2021, 2.2% of npm package versions contained known vulnerabilities [14]. One reason for this is that OSS packages are not static but constantly evolve and gain new features. Another reason might be that not all contributors have the competence required to spot certain vulnerabilities. Also, some people might be discouraged from contributing for various reasons, such as a lack of time or funding [13]. We can therefore conclude that trust in OSS is not well-grounded.

We also need to consider that trusting an OSS package in fact means trusting its contributors, and especially its maintainers. Trusting OSS can therefore only be plausible and justified if one can rationally expect its developers to act responsibly. One could argue that most maintainers have an interest of their own in maintaining a legitimate package, so motives-based theories would consider them trustworthy (cf. Section 5.2). One could also argue that, since OSS is often developed by a community whose members interact socially with one another (remotely), maintainers and contributors are subject to the normative expectations outlined in Section 5.2, so non-motives-based theories would also consider OSS trustworthy.

On the other hand, we saw in Section 5.3 that there are several reasons why a developer might not act responsibly and thus might not be trustworthy. Nevertheless, one may be optimistic that the maintainers of an OSS package act responsibly and are trustworthy, which makes it plausible to trust the package. However, trusting a package cannot always be justified, especially if one does so blindly. In general, it can be rational to trust OSS as a whole, but one should carefully consider for each individual package whether one can optimistically expect it to be trustworthy.

6 Ethical Analysis of the Incidents

In Section 4, we discussed the incidents involving colors.js, faker.js, and node-ipc from a technical viewpoint. In this section, we consider them from an ethical perspective: we discuss whether the behavior of the maintainers who attacked their own projects is morally acceptable, and why they might have acted the way they did.

6.1 colors.js and faker.js

As early as November 8, 2020, Marak, the developer of colors.js and faker.js, opened the following issue (essentially a discussion thread) on the GitHub page of faker.js:

faker.js issue #1046: No more free work from Marak—Pay Me or Fork This

Respectfully, I am no longer going to support Fortune 500s (and other smaller sized companies) with my free work. There isn’t much else to say. Take this as an opportunity to send me a six figure yearly contract or fork the project and have someone else work on it.

Source: [18]

On January 8, 2022, the day of the colors.js attack, Marak opened an issue on its GitHub page, mockingly saying:

colors.js issue #285: Zalgo issue with v1.4.44-liberty-2 release

It’s come to our attention that there is a zalgo bug in the v1.4.44-liberty-2 release of colors. Please know we are working right now to fix the situation and will have a resolution shortly.

Source: [19]

Later, he posted multiple comments, cynically pretending not to know where the bug came from or how to fix it, and claiming he had been working on it all night [19]. In one comment, he even asked other maintainers to fix the bug, knowing they did not have access to the project [32].

Although OSS is increasingly adopted commercially (cf. Section 2.2), it is often still developed by volunteers who receive no compensation [24]. Such volunteers “increasingly report stress and even burnout” [24]. Marak’s complaint in 2020 and the way he mocked his community in the days following the incident suggest that he was stressed and frustrated by the lack of compensation. One contributor replied to Marak that his actions were harming other OSS developers rather than only the large corporations. As explained in Section 5.3, the internet may have blurred his sense of whom his behavior actually hurt.

However, the two incidents need to be assessed separately. Marak’s removal of all source code from faker.js is arguably morally acceptable, since it raised awareness of his situation without harming others (cf. Section 4.1), and is thus in line with Hans Jonas’ imperative (Proposition 1) and engineering ethics codes (Proposition 2). The attack on colors.js, on the other hand, is not morally acceptable. While the circumstances that led Marak to perform the attack are quite relatable, they do not justify it: the infinite loop in colors.js could potentially have harmed systems belonging to critical infrastructure, and thus harmed other people. This attack is therefore morally unacceptable according to Propositions 1 and 2. Furthermore, mocking the people who trusted him is not morally acceptable. Marak could simply have stopped working on both packages and informed their users that they were no longer maintained.

6.2 node-ipc

Shortly after the maintainer of node-ipc, RIAEvangelist, added the peacenotwar package as a dependency (cf. Section 4.2), a contributor opened an issue on the GitHub page of node-ipc, asking RIAEvangelist to “Remove the ‘peacenotwar’ module” [27] and further stating “Don’t get me wrong, I’m against all forms of war, but sabotaging people’s dependencies is not the way to protest” [27]. This indicates that RIAEvangelist did not fulfill the responsibilities his community normatively expected of him. RIAEvangelist responded, trying to justify his actions: “You are free to lock your dependency to a version that does not include this until something happens with the war, like it turns into WWIII and more of us wish that we had done something about it, or ends and this gets removed” [27]. He later said: “I’m going to tag the piece not war module as protestware just to make it explicitly clear that that’s what it is” [27].

Publishing the peacenotwar package as such can be considered morally acceptable, since RIAEvangelist clearly stated in the package’s description how it would behave (cf. Section 4.2). However, adding this package as a dependency of node-ipc without immediately disclosing it might be considered morally wrong, since the malicious peacenotwar package thereby reached users of node-ipc without their knowledge. Furthermore, he programmed node-ipc to sabotage users’ files, which violates their privacy and security and is thus morally unacceptable.

In RIAEvangelist’s view, protesting against the Russian invasion of Ukraine was evidently morally more valuable than retaining the trust of his OSS community. But, as the contributor who opened the issue suggested, one must ask whether there would have been a better way to protest. RIAEvangelist could, for example, have programmed the package only to print a protest message to the console (which he also did, cf. Section 4.2), without sabotaging users’ files. One could say that, while trying to act in an especially exemplary way by protesting, he in fact acted morally wrong and lost the trust of many people.

7 What We Must Learn

We argued that it might be plausible to trust OSS, but that one cannot do so blindly (cf. Section 5). The increasing spread of SCAs should be a warning sign, prompting us to employ mitigation strategies such as those outlined in Section 3.4. Furthermore, although software reuse is good practice, one should ask whether it is really necessary to introduce a new OSS dependency for simple tasks, since every dependency, together with its transitive dependencies, increases the attack surface of one’s own software. One should also think critically about using the auto-update functionality of dependency managers.
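To make the auto-update concern more concrete: npm dependencies are usually declared as version ranges rather than exact versions [22]. By default, `npm install <pkg>` records a caret range such as `^1.4.0`, which accepts any later release with the same major version, so a newly published (possibly sabotaged) minor or patch release can be pulled in on a fresh install without a lockfile or after running `npm update`. The following Python sketch models only the caret rule and exact pins for plain MAJOR.MINOR.PATCH versions; it deliberately ignores prerelease tags, tilde ranges, and the rest of npm’s range grammar. The functions `parse`, `caret_upper_bound`, and `satisfies` are hypothetical illustrations, not part of npm or any semver library.

```python
from typing import Tuple

# A plain semantic version (MAJOR, MINOR, PATCH); prerelease/build tags are NOT modeled.
Version = Tuple[int, int, int]


def parse(version: str) -> Version:
    """Parse a plain 'MAJOR.MINOR.PATCH' string into a tuple of ints (simplified)."""
    major, minor, patch = (int(part) for part in version.split("."))
    return (major, minor, patch)


def caret_upper_bound(base: Version) -> Version:
    """Exclusive upper bound of the caret range ^base, following npm's caret rules:
    the left-most non-zero component must stay fixed."""
    major, minor, patch = base
    if major > 0:
        return (major + 1, 0, 0)   # ^1.4.0 -> <2.0.0
    if minor > 0:
        return (0, minor + 1, 0)   # ^0.3.1 -> <0.4.0
    return (0, 0, patch + 1)       # ^0.0.3 -> <0.0.4


def satisfies(version: str, spec: str) -> bool:
    """True if `version` would be accepted by `spec`, which is either a caret
    range ('^X.Y.Z') or an exact pin ('X.Y.Z'). Tuples compare lexicographically."""
    v = parse(version)
    if spec.startswith("^"):
        base = parse(spec[1:])
        return base <= v < caret_upper_bound(base)
    return v == parse(spec)


if __name__ == "__main__":
    # Hypothetical new releases of a dependency declared as ^1.4.0 vs. pinned to 1.4.0.
    for candidate in ["1.4.1", "1.9.3", "2.0.0"]:
        print(candidate, satisfies(candidate, "^1.4.0"), satisfies(candidate, "1.4.0"))
```

For example, `satisfies("1.4.1", "^1.4.0")` is `True`, whereas `satisfies("1.4.1", "1.4.0")` is `False`: an exact pin (or a committed lockfile installed with `npm ci`) only changes when a developer deliberately updates it, which leaves room to review a new release first. The trade-off is that pinned dependencies also do not receive security fixes automatically.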

Besides these rather technical strategies, we should also try to prevent SCAs before they happen. The fact that, in the incidents we discussed, the attackers were the packages’ own maintainers should raise our awareness of the circumstances that led them to their irresponsible behavior. We should ask ourselves how we, as end users, might share responsibility as well, e.g., by benefiting from OSS without even considering a donation.

8 Related Work

Ladisa et al. [17] proposed a taxonomy for categorizing SCAs. Ohm et al. [23] reviewed several real-world SCAs on OSS. Zimmermann et al. [34] analyzed security risks in the npm ecosystem in particular. These works do not analyze the two SCAs covered in this paper, partly because of their recency. Sharma [30], Tal [31], and Tal and Ben Josef [32] analyze, from a technical viewpoint, how the two SCAs we discussed unfolded. None of the aforementioned works provides an ethical perspective, as this work does. Ferreira et al. [7], Garrett et al. [10], and Sejfia and Schäfer [29] each propose mitigation strategies for SCAs, which we summarized in this work. In his short article, Bellovin [5] mentions several recent SCAs, including the ones we analyzed, but does not go into detail on how these incidents unfolded. He raises the question of whether one can trust OSS developers, which we have tried to answer in greater detail. Noorman [21] discusses moral responsibility in computing, but without a specific focus on SCAs or OSS.

9 Conclusion

We saw that SCAs are a major cybersecurity threat nowadays [6]. Because an average OSS package has many dependencies, an attack on a single package can spread to thousands of other packages that depend on it directly or transitively. Dependency managers play a significant role in such attacks, as they make it easy to include many (micro-)packages and can automatically download package updates, which could be malicious [10, 12, 34]. We have seen that it is impractical to manually review all packages and each of their updates [17, 29, 34]. We discussed that machine-learning-based systems can flag malicious packages with some confidence [10, 29], and that it is advisable to sandbox packages, as is common practice with mobile apps [7]. We discussed the colors.js, faker.js, and node-ipc incidents in the npm ecosystem, which recently gained attention because the maintainers themselves attacked their own OSS packages. We concluded that the faker.js incident does not meet the criteria for an SCA, whereas the colors.js and node-ipc incidents do.

We introduced the fundamentals of trust and responsibility in technology ethics and asked whether a maintainer is responsible for the integrity of their package. We concluded that a developer might not be responsible for actively maintaining their package, but if they do so, or pretend to do so, they are in fact responsible for its integrity. We considered that the sense of responsibility a developer feels might be reduced by many factors, among others the temporal and/or physical distance between their actions and the resulting consequences, the anonymity of the internet, personal circumstances such as stress, or hierarchical, economic, or time pressures [5, 21]. Furthermore, we discussed whether it is rational to trust OSS. We saw that trust in OSS cannot be well-grounded, since OSS packages remain vulnerable, and that trusting OSS means trusting its contributors, and especially its maintainers, to act responsibly. Such trust can nevertheless be plausible and sometimes even justified. We therefore concluded that it can be rational to trust OSS, but that one cannot do so blindly and needs to consider each package individually.

We then returned to the recent incidents and analyzed whether the behavior of the maintainers is morally acceptable. We concluded that it is morally acceptable simply to stop maintaining a package, and that it might even be acceptable to remove all of a package’s source code, as was done in the faker.js incident. However, the behavior of the maintainers in the colors.js and node-ipc incidents is not morally acceptable. While their circumstances and motives might be quite relatable, they do not justify the attacks, which affected many (mostly) innocent people.

Finally, we concluded that one should apply SCA mitigation strategies. One should also consider whether a new dependency is really needed for small tasks, and be cautious with the auto-update functionality of dependency managers. Furthermore, users of OSS packages should consider donating to the mostly unsalaried volunteers who maintain them.

10 References

- [1] GNU Free Software Foundation (GNU). What is free software? - GNU Project - Free Software Foundation. url: https://www.gnu.org/philosophy/free-sw.en.html (visited on 05/24/2022).

- [2] Open Source Initiative (OSI). The Open Source Definition. url: https://opensource.org/docs/osd (visited on 05/24/2022).

- [3] Morten Andersen-Gott, Gheorghita Ghinea, and Bendik Bygstad. “Why do commercial companies contribute to open source software?” In: International Journal of Information Management 32.2 (2012), pp. 106–117. issn: 0268-4012. doi: 10.1016/j.ijinfomgt.2011.10.003. url: https://www.sciencedirect.com/science/article/pii/S026840121100123X.

- [4] sturman anvaka AsaAyers. npmgraph.an - Visualization of NPM dependencies. url: https://npm.anvaka.com/ (visited on 06/20/2022).

- [5] Steven M. Bellovin. “Open Source and Trust”. In: IEEE Security & Privacy 20.2 (Mar. 2022), pp. 107–108. issn: 1558-4046. doi: 10.1109/MSEC.2022.3142464.

- [6] ENISA. Enisa Threat Landscape 2021. Oct. 2021. url: https://www.enisa.europa.eu/publications/enisa-threat-landscape-2021 (visited on 06/18/2022).

- [7] Gabriel Ferreira et al. “Containing Malicious Package Updates in npm with a Lightweight Permission System”. In: 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE). 2021, pp. 1334–1346. doi: 10.1109/ICSE43902.2021.00121. url: https://ieeexplore.ieee.org/abstract/document/9402108 (visited on 06/18/2022).

- [8] Maarten Franssen, Gert-Jan Lokhorst, and Ibo van de Poel. “Philosophy of Technology”. In: The Stanford Encyclopedia of Philosophy. Ed. by Edward N. Zalta. Fall 2018. Metaphysics Research Lab, Stanford University, 2018. url: https://plato.stanford.edu/archives/fall2018/entries/technology/ (visited on 06/18/2022).

- [9] Alfonso Fuggetta. “Open source software––an evaluation”. In: Journal of Systems and Software 66.1 (2003), pp. 77–90. issn: 0164-1212. doi: 10.1016/S0164-1212(02)00065-1. url: https://www.sciencedirect.com/science/article/pii/S0164121202000651 (visited on 06/18/2022).

- [10] Kalil Garrett et al. “Detecting Suspicious Package Updates”. In: 2019 IEEE/ACM 41st International Conference on Software Engineering: New Ideas and Emerging Results (ICSE-NIER). 2019, pp. 13–16. doi: 10.1109/ICSE-NIER.2019.00012. url: https://ieeexplore.ieee.org/abstract/document/8805698 (visited on 06/18/2022).

- [11] Armin Grunwald. Handbuch Technikethik. de. Ed. by Armin Grunwald. J.B. Metzler, Aug. 2013. isbn: 978-3-476-02443-5.

- [12] Tomas Gustavsson. “Managing the Open Source Dependency”. In: Computer 53.2 (2020), pp. 83–87. doi: 10.1109/MC.2019.2955869.

- [13] S.A. Hissam, D. Plakosh, and C. Weinstock. “Trust and vulnerability in open source software”. English. In: IEE Proceedings - Software 149 (1 Feb. 2002), 47–51(4). issn: 1462-5970. url: http://www.jstor.org/stable/24436821 (visited on 05/06/2022).

- [14] Sonatype Inc. SONATYPE’s 2021 State of the software supply chain. July 2021. url: https://www.sonatype.com/resources/state-of-the-software-supply-chain-2021 (visited on 05/18/2022).

- [15] Brent Jesiek. “Democratizing software: Open source, the hacker ethic, and beyond”. In: First Monday 8.10 (Oct. 2003). doi: 10.5210/fm.v8i10.1082. url: https://firstmonday.org/ojs/index.php/fm/article/view/1082/1002 (visited on 06/18/2022).

- [16] Hans Jonas. Das Prinzip Verantwortung. de. 6th ed. suhrkamp taschenbuch. Berlin, Germany: Suhrkamp, Apr. 2003. isbn: 978-3-518-39992-7.

- [17] Piergiorgio Ladisa et al. Taxonomy of Attacks on Open-Source Software Supply Chains. 2022. doi: 10.48550/ARXIV.2204.04008. arXiv: 2204.04008.

- [18] Marak. No more free work from Marak - pay me or fork this - issue #1046 - Marak/Faker.js. Nov. 2020. url: http://web.archive.org/web/20210704022108/https://github.com/Marak/faker.js/issues/1046 (visited on 06/18/2022).

- [19] Marak. Zalgo issue with v1.4.44-liberty-2 release - issue #285 - Marak/colors.js. Jan. 8, 2022. url: https://web.archive.org/web/20220320201334/https://github.com/Marak/colors.js/issues/285 (visited on 03/15/2022).

- [20] Carolyn McLeod. “Trust”. In: The Stanford Encyclopedia of Philosophy. Ed. by Edward N. Zalta. Fall 2021. Metaphysics Research Lab, Stanford University, 2021. url: https://plato.stanford.edu/archives/fall2021/entries/trust/ (visited on 06/18/2022).

- [21] Merel Noorman. “Computing and Moral Responsibility”. In: The Stanford Encyclopedia of Philosophy. Ed. by Edward N. Zalta. Spring 2020. Metaphysics Research Lab, Stanford University, 2020. url: https://plato.stanford.edu/archives/spr2020/entries/computing-responsibility/ (visited on 06/18/2022).

- [22] npm. About semantic versioning. url: https://docs.npmjs.com/about-semantic-versioning (visited on 05/28/2022).

- [23] Marc Ohm et al. “Backstabber’s Knife Collection: A Review of Open Source Software Supply Chain Attacks”. In: Detection of Intrusions and Malware, and Vulnerability Assessment. Ed. by Clémentine Maurice et al. Cham: Springer International Publishing, 2020, pp. 23–43. isbn: 978-3-030-52683-2.

- [24] Cassandra Overney. “Hanging by the Thread: An Empirical Study of Donations in Open Source”. In: Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering: Companion Proceedings. New York, NY, USA: Association for Computing Machinery, 2020, pp. 131–133. isbn: 9781450371223. doi: 10.1145/3377812.3382170.

- [25] Pierluigi Paganini. Node-IPC NPM package sabotage to protest Ukraine invasion. Mar. 2022. url: https://securityaffairs.co/wordpress/129174/hacking/node-ipc-npm-package-sabotage.html (visited on 06/18/2022).

- [26] Eric Raymond. “The cathedral and the bazaar”. In: Knowledge, Technology & Policy 12.3 (1999), pp. 23–49.

- [27] RIAEvangelist. issue #233 - RIAEvangelist / node-ipc. Mar. 9, 2022. url: https://web.archive.org/web/20220315161016/https://github.com/RIAEvangelist/node-ipc/issues/233 (visited on 03/15/2022).

- [28] RIAEvangelist. peacenotwar. url: https://web.archive.org/web/20220330234320/https://github.com/RIAEvangelist/peacenotwar (visited on 06/01/2022).

- [29] Adriana Sejfia and Max Schäfer. “Practical Automated Detection of Malicious npm Packages”. 2022. doi: 10.1145/3510003.3510104. arXiv: 2202.13953.

- [30] Ax Sharma. npm Libraries ‘colors’ and ‘faker’ Sabotaged in Protest by their Maintainer—What to do Now? 2022. url: https://blog.sonatype.com/npm-libraries-colors-and-faker-sabotaged-in-protest-by-their-maintainer-what-to-do-now (visited on 05/06/2022).

- [31] Liran Tal. Alert: peacenotwar module sabotages npm developers in the node-ipc package to protest the invasion of Ukraine. 2022. url: https://snyk.io/blog/peacenotwar-malicious-npm-node-ipc-package-vulnerability/ (visited on 05/06/2022).

- [32] Liran Tal and Assaf Ben Josef. Open source maintainer pulls the plug on npm packages colors and faker, now what? Jan. 9, 2022. url: https://snyk.io/blog/open-source-npm-packages-colors-faker/ (visited on 05/19/2022).

- [33] Margaret Urban Walker. Moral Repair: Reconstructing Moral Relations After Wrongdoing. Cambridge: Cambridge University Press, 2006.

- [34] Markus Zimmermann et al. “Small World with High Risks: A Study of Security Threats in the npm Ecosystem”. In: 28th USENIX Security Symposium (USENIX Security 19). Santa Clara, CA: USENIX Association, Aug. 2019, pp. 995–1010. isbn: 978-1-939133-06-9. url: https://www.usenix.org/conference/usenixsecurity19/presentation/zimmerman (visited on 06/18/2022).